Google handles billions of searches every day, and about 15% of them are queries it has never seen before. Google therefore needs ways to return useful results for queries it cannot anticipate.

When we search on Google, we don't always phrase our queries in the best way. Often we are searching for something precisely because we don't know much about it, so we may not know how best to formulate the query.

It's up to Google Search to work out exactly what you are looking for when you type something into the search bar, and to retrieve relevant information no matter how you typed, spelled, or phrased your query. Google has steadily improved its language understanding over time, but it still sometimes gets things wrong. That's one reason many people type strings of keywords instead of asking a conversational question.

Thanks to machine learning and the latest advances from its research team in the science of language understanding, Google has significantly improved how it understands search queries. Google describes this as its biggest leap forward in the past five years, and one of the biggest in the history of Search.

Google Algorithm Update: Applying BERT Models to Search

Google introduced and open-sourced Bidirectional Encoder Representations from Transformers (BERT) last year. It is a neural network-based technique for pre-training models for natural language processing (NLP). BERT lets anyone train their own state-of-the-art question answering system.

BERT grew out of research on transformers: models that process each word in relation to all the other words in a sentence, rather than one by one in order. A BERT model can therefore consider the full context of a word by looking at the words that come both before and after it, which is especially useful for understanding the intent behind a search query.
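To make "bidirectional" context a bit more concrete, here is a toy Python sketch. It is not Google's code and not how BERT is actually implemented; it only lists which words a model could use as context for one target word. A left-to-right model sees just the words before the target, while a bidirectional model like BERT can also use the words after it. The function name `visible_context` is hypothetical, chosen for illustration.

```python
def visible_context(tokens, index, bidirectional):
    """Return the words a model can use as context for tokens[index].

    Left-to-right: only the words before the target.
    Bidirectional: the words before AND after the target.
    """
    left = tokens[:index]
    if not bidirectional:
        return left
    return left + tokens[index + 1:]


query = "2019 brazil traveler to the usa needs a visa".split()
target = query.index("to")  # the preposition we want to interpret

print(visible_context(query, target, bidirectional=False))
# ['2019', 'brazil', 'traveler']
print(visible_context(query, target, bidirectional=True))
# ['2019', 'brazil', 'traveler', 'the', 'usa', 'needs', 'a', 'visa']
```

Only the bidirectional view includes "usa", the word that tells you where the traveler is going.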

This advance was not possible through software alone; it required new hardware as well. Some of the models built with BERT are so complex that they push the limits of what traditional hardware can do, so for the first time Google is using Cloud TPUs to serve search results and get you more relevant information.

Decoding your queries

That's a lot of technical detail to digest, so let's look at what it means for your searches. BERT models help Search find useful information for you, and they affect both rankings and featured snippets. In the U.S., BERT will help Search better understand about one in ten English-language searches, and this will expand to other languages over time.

This especially helps with longer, more conversational queries, and with searches where prepositions like “from” and “in” matter a lot to the meaning: Search will be able to understand the context of the words in your query. You can search in whatever way feels natural to you.

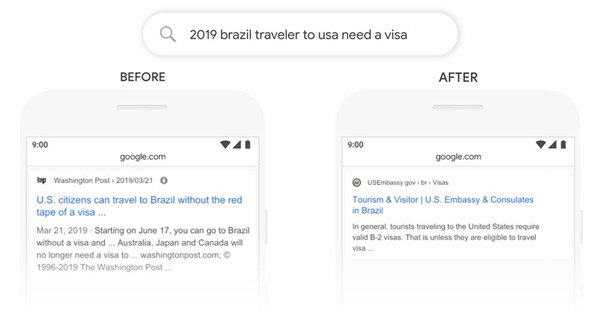

Google ran extensive tests before launching these improvements to make sure the changes are actually more useful. The example below, taken from that evaluation process, shows BERT's ability to understand the intent behind a search.

Take the search query “2019 brazil traveler to the USA needs a visa”. Here the word “to” and its relationship to the other words in the query are key to its meaning: the query is about a Brazilian traveling to the U.S., not the other way around. Previously, Google's algorithms didn't grasp the importance of this connection, so Search returned results about U.S. citizens traveling to Brazil. With BERT, Search can understand that this very common word matters a lot here, and it can return a much more relevant result for this query.
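Here is a toy Python sketch of why word order matters in this example. To a simple matcher that compares only keywords and ignores their order, the two queries below look identical, even though they ask about opposite trips. The two queries and the helper names `keywords` and `word_after` are hypothetical and chosen for illustration; this is not how Google Search or BERT works, only a picture of the information a keyword-only view throws away.

```python
def keywords(query):
    """Order-insensitive set of words, roughly what a pure keyword match compares."""
    return set(query.lower().split())


def word_after(query, word):
    """Hypothetical helper: return the word that directly follows `word`."""
    tokens = query.lower().split()
    return tokens[tokens.index(word) + 1]


outbound = "brazil traveler to usa visa"
inbound = "usa traveler to brazil visa"

# Same keywords, so a keyword-only view can't tell the two queries apart.
print(keywords(outbound) == keywords(inbound))  # True

# The word right after "to" is the destination, and it differs.
print(word_after(outbound, "to"))  # usa
print(word_after(inbound, "to"))   # brazil
```

A model that reads the whole query in order, as BERT does, keeps exactly the information the keyword set discards.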

Enhancing search in other languages

Google is also applying BERT to make Search better for people around the world, so they can search naturally and get relevant results. A powerful characteristic of these systems is that they can take what they learn in one language and apply it to others. Google can take models that learn from improvements in English (the language of most content on the web) and apply them to other languages. This helps Google return more relevant results in the many languages Search is offered in.

Keep in touch with Red Berries – A Digital Marketing Company In Dubai to stay up to date on emerging issues in the digital industry. If you have any questions, need any help, or are looking for a Digital Marketing Agency Dubai, contact us today! Also like us on Facebook and follow us on Twitter and LinkedIn for more updates.